Prompt Engineering Guidelines

Warning

This guide is based on an early version of RapidGPT and includes certain limitations. Users are encouraged to verify the provided information for accuracy and relevance to their specific needs.

Note

PrimisAI has compiled best practices to optimize RapidGPT's performance and minimize these limitations. This guide first presents RapidGPT's current limitations, then common use cases, and finally prompt engineering tips for achieving high-quality output.

Limitations

Hallucinations

RapidGPT, like many advanced language models, can occasionally generate information that is plausible-sounding but incorrect. This phenomenon, known as "hallucination," means that the model might produce answers or statements that do not align with factual data or the intended context. Users should verify critical information provided by RapidGPT and cross-reference it with trusted sources to ensure accuracy. This limitation highlights the importance of human oversight and the need for careful review of the model's outputs in high-stakes or sensitive applications.

Dependency on Quality and Clarity of Prompts

RapidGPT's performance is highly dependent on the quality and clarity of the prompts provided by the user. Vague or poorly structured prompts can lead to ambiguous or irrelevant responses. For optimal performance, users should provide detailed, specific, and well-structured prompts. This limitation underscores the importance of prompt engineering, where crafting precise questions or instructions can significantly enhance the relevance and accuracy of the model’s outputs.

Context Window

RapidGPT has a context window limit of 8096 tokens. This means the model can process and retain up to 8096 tokens of input and output combined at a time. A token is a unit of text, often a whole word, part of a word, or a punctuation mark. When the input exceeds this limit, earlier content may be truncated or ignored, which can affect the model's ability to accurately reference earlier parts of the conversation or document. Keep this limit in mind and consider breaking larger inputs into smaller, manageable chunks so that the model considers all relevant information.
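As a rough illustration of chunking, the sketch below splits a long input (for example, a large RTL file) on line boundaries into pieces that each stay under a token budget. RapidGPT's actual tokenizer is not described in this guide, so the sketch assumes a crude estimate of about four characters per token. The function names, the four-characters heuristic, and the default budget of 3000 tokens (chosen to leave headroom under the 8096-token limit for instructions and the model's response) are all illustrative assumptions, not part of RapidGPT.

```python
import math


def estimate_tokens(text: str, chars_per_token: int = 4) -> int:
    """Rough token count, assuming ~4 characters per token (not RapidGPT's real tokenizer)."""
    return math.ceil(len(text) / chars_per_token)


def chunk_lines(text: str, max_tokens: int = 3000) -> list[str]:
    """Split text on line boundaries so each chunk's estimated token count stays within max_tokens.

    Summing per-line estimates slightly overcounts, so chunks err on the small side.
    A single line longer than max_tokens becomes its own (oversized) chunk.
    """
    chunks: list[str] = []
    current: list[str] = []
    current_tokens = 0
    for line in text.splitlines(keepends=True):
        line_tokens = estimate_tokens(line)
        # Close the current chunk if this line would push it over the budget.
        if current and current_tokens + line_tokens > max_tokens:
            chunks.append("".join(current))
            current, current_tokens = [], 0
        current.append(line)
        current_tokens += line_tokens
    if current:
        chunks.append("".join(current))
    return chunks


if __name__ == "__main__":
    # Hypothetical large RTL input: 2,000 simple assign statements.
    rtl = "".join(f"assign w{i} = a{i} & b{i};\n" for i in range(2000))
    parts = chunk_lines(rtl, max_tokens=3000)
    for n, part in enumerate(parts, start=1):
        print(f"chunk {n}: ~{estimate_tokens(part)} tokens")
```

Each chunk can then be sent in its own message. Because content that falls outside the window may no longer be visible to the model, it can help to open each message with a one-line reminder of what the earlier chunks contained.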

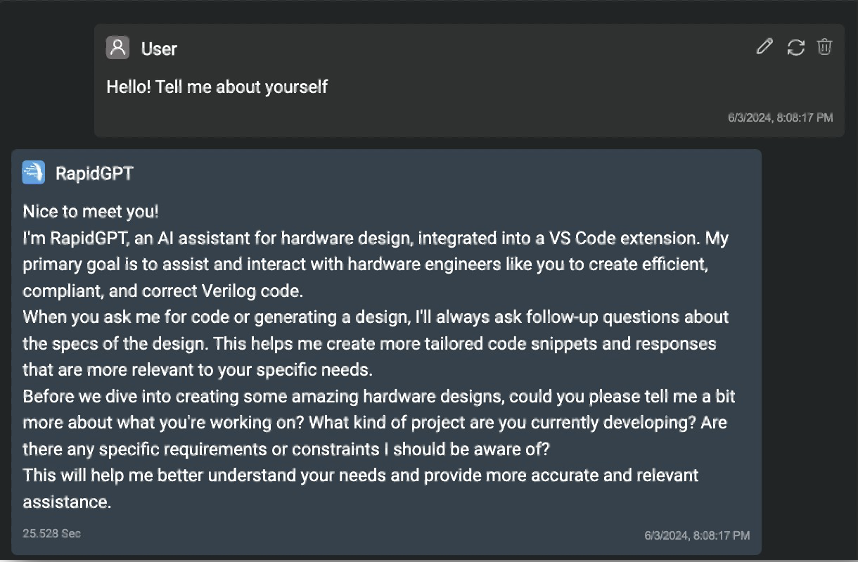

From RapidGPT Itself

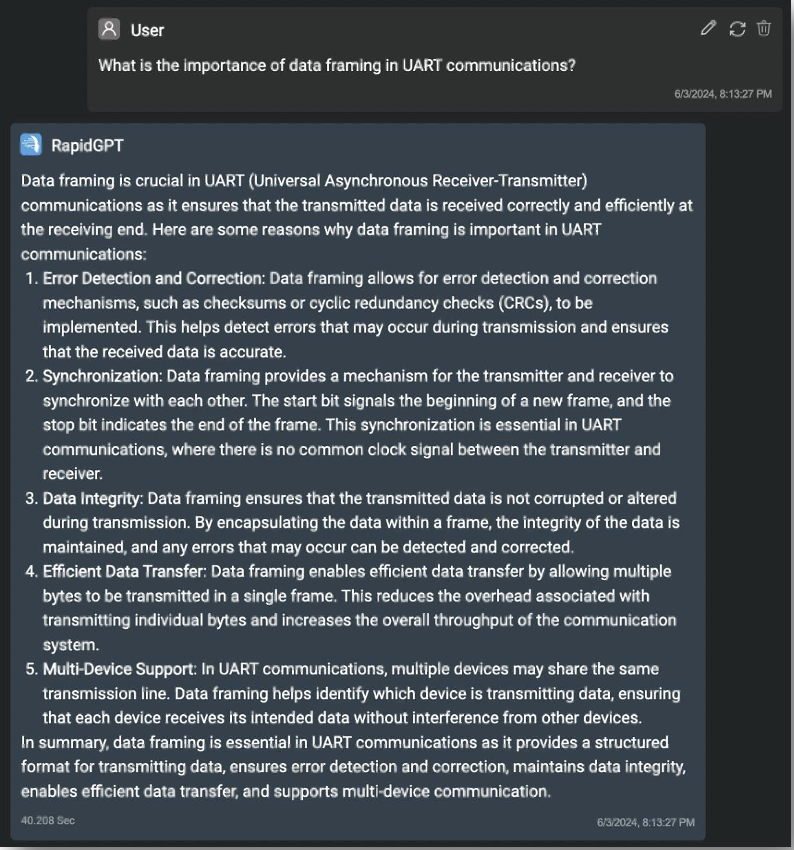

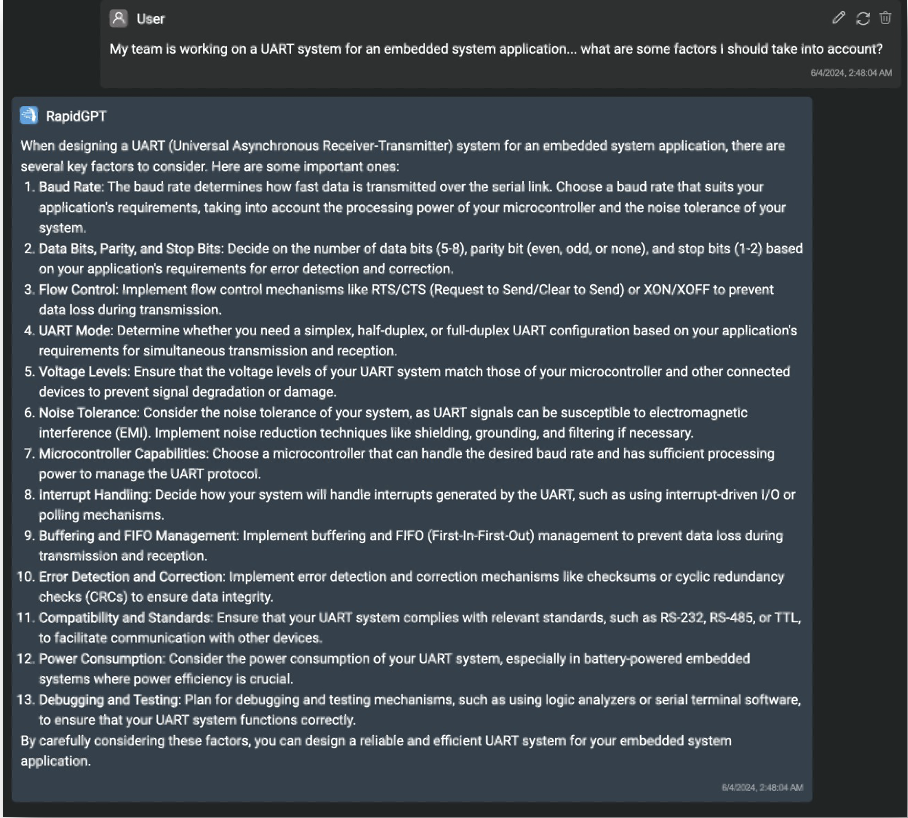

Common Usage: General Hardware Knowledge

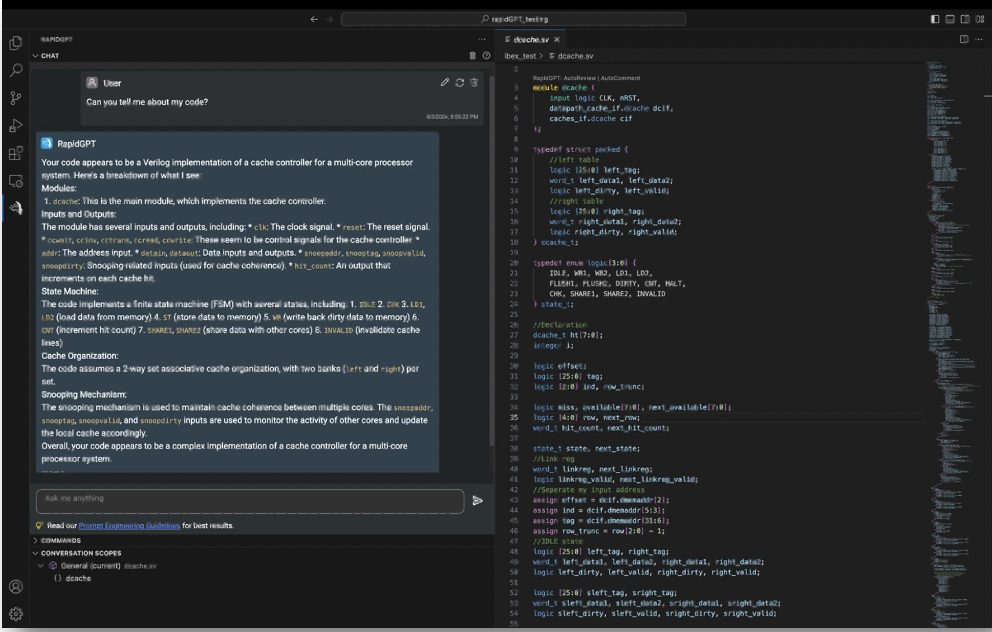

Common Usage: Code Analysis

By reading the contents of the open code window, RapidGPT can provide detailed insights into your current project.

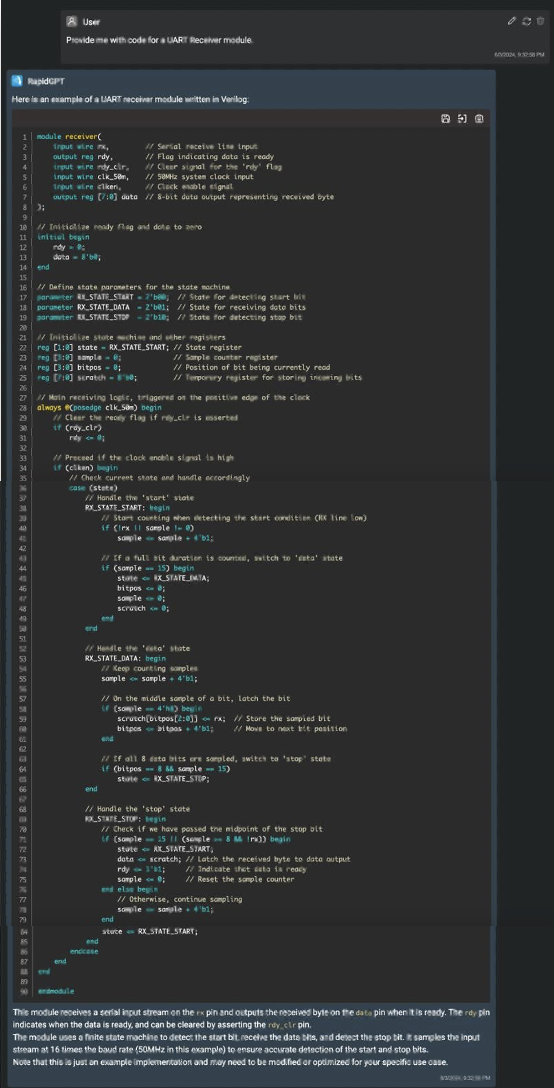

Common Usage: RTL Code Generation

When prompted for RTL, RapidGPT returns a code block that can be pasted directly into your code window.

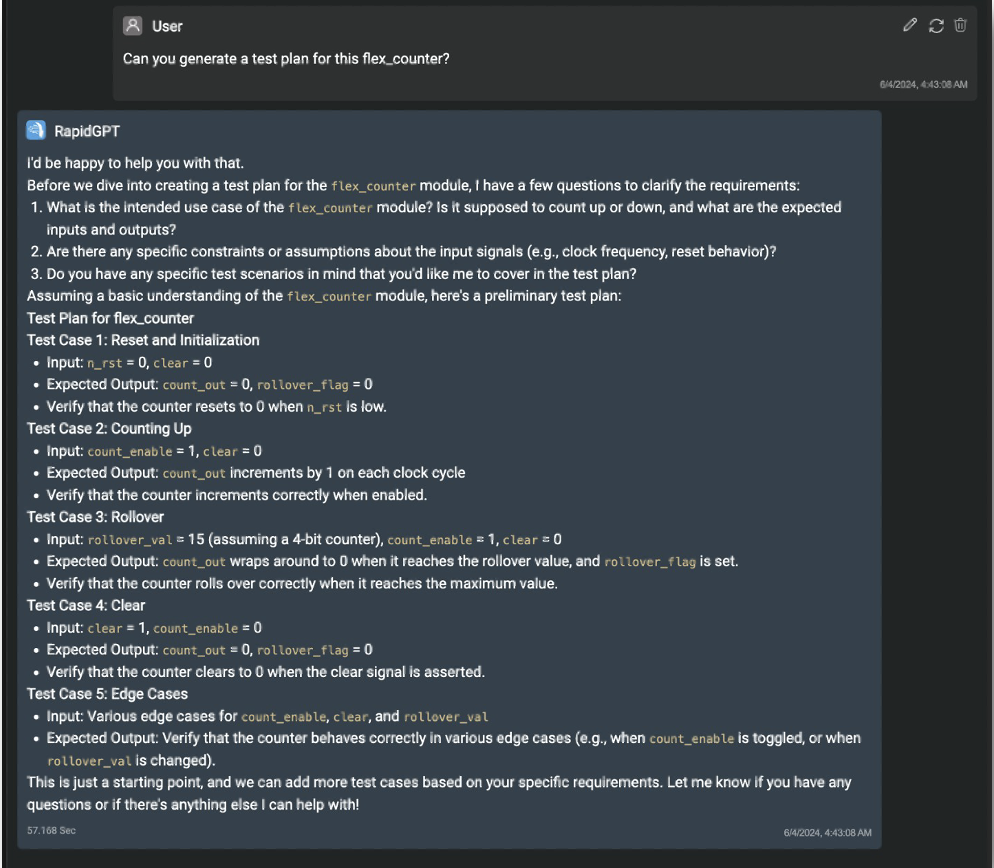

Common Usage: Test Plan Generation

RapidGPT can assist with verification by outlining a comprehensive testing plan for a given module.

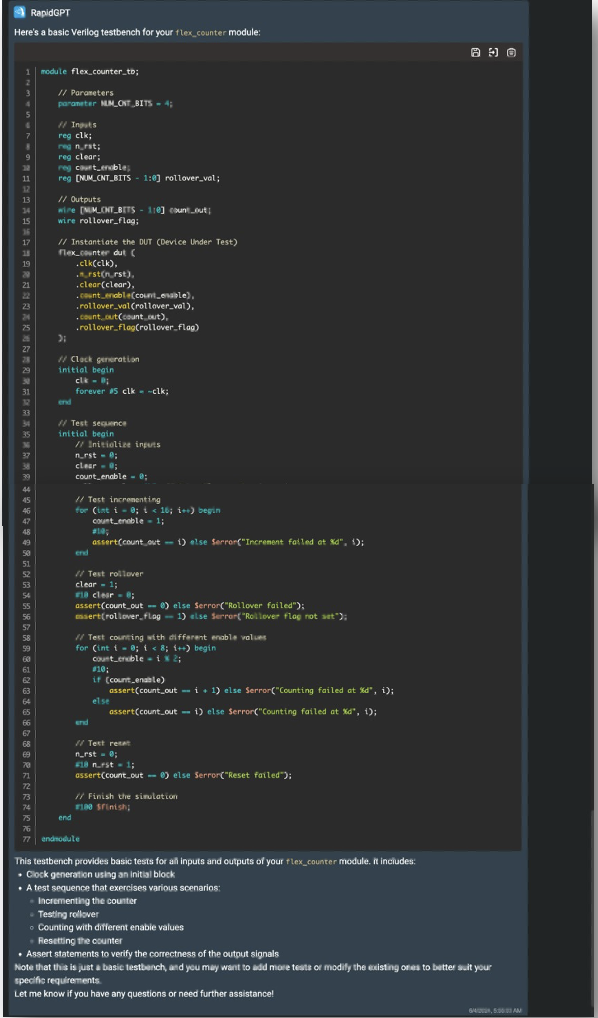

Common Usage: Testbench Code Generation

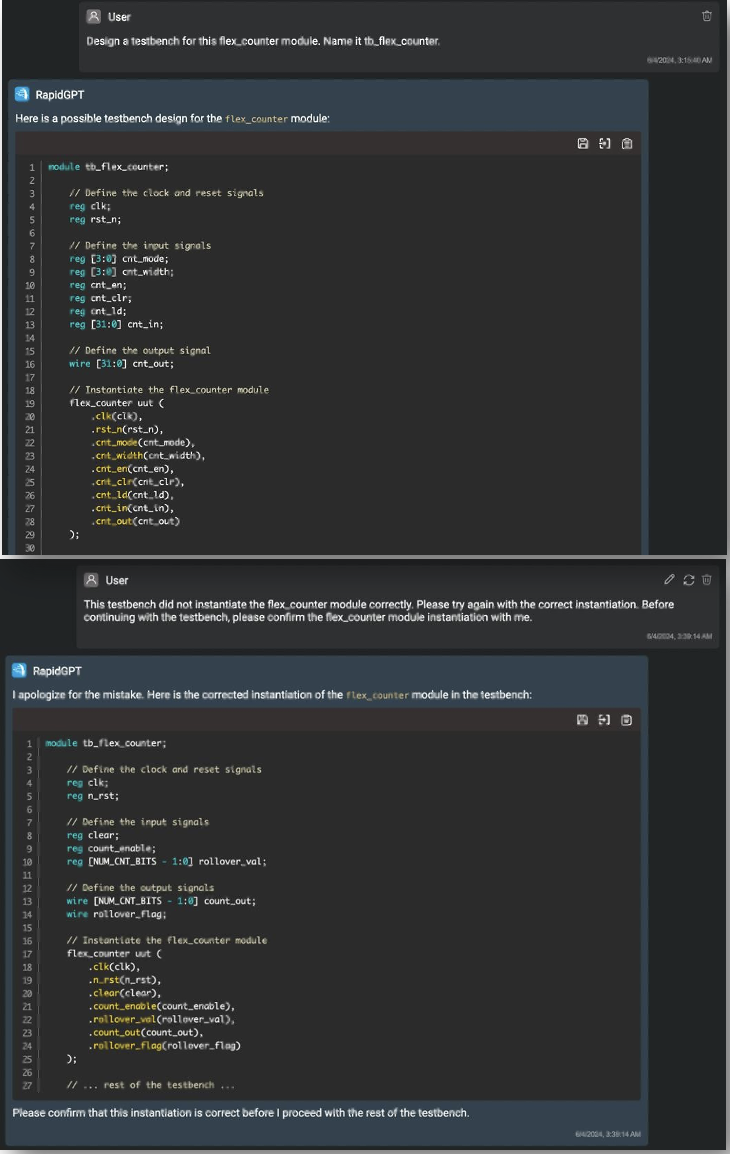

Following the test plan developed above, RapidGPT can convert the plan into testbench code.

Prompt Engineering Tips

Requests to RapidGPT often fall into the categories above. However, because RapidGPT is prone to hallucinations and errors, the following tips and guidelines can help steer it in the right direction and achieve your goals.

By crafting well-designed prompts, you can get more accurate and relevant responses from the AI model.

All of the tips below can be combined to improve RapidGPT's understanding of a given hardware task and to produce higher-quality RTL. Combining them gives RapidGPT a fuller picture of the task and improves the success rate of this next-token prediction technology.

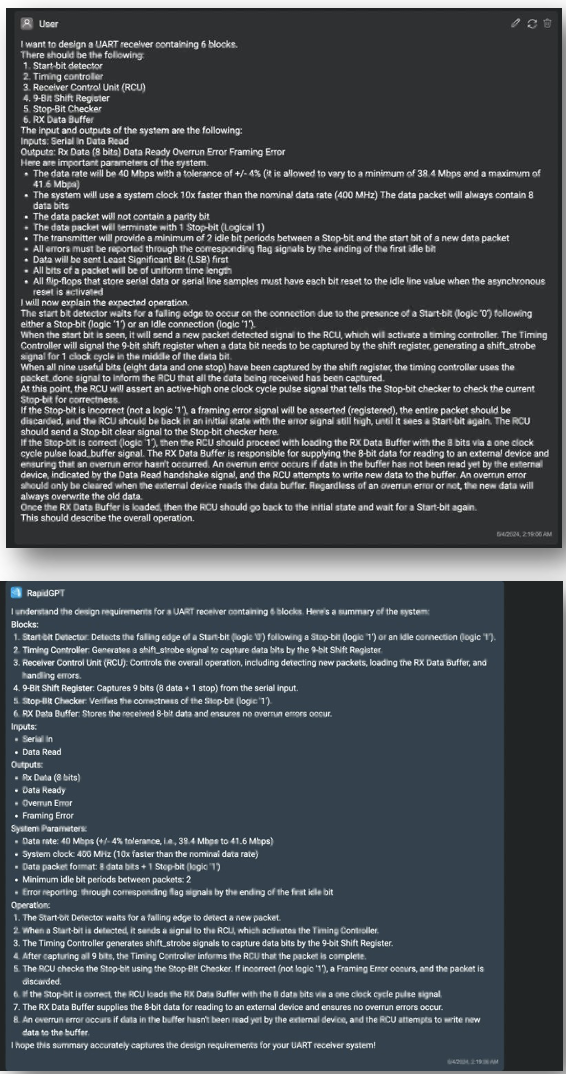

Prompt Engineering Tips: Providing Context

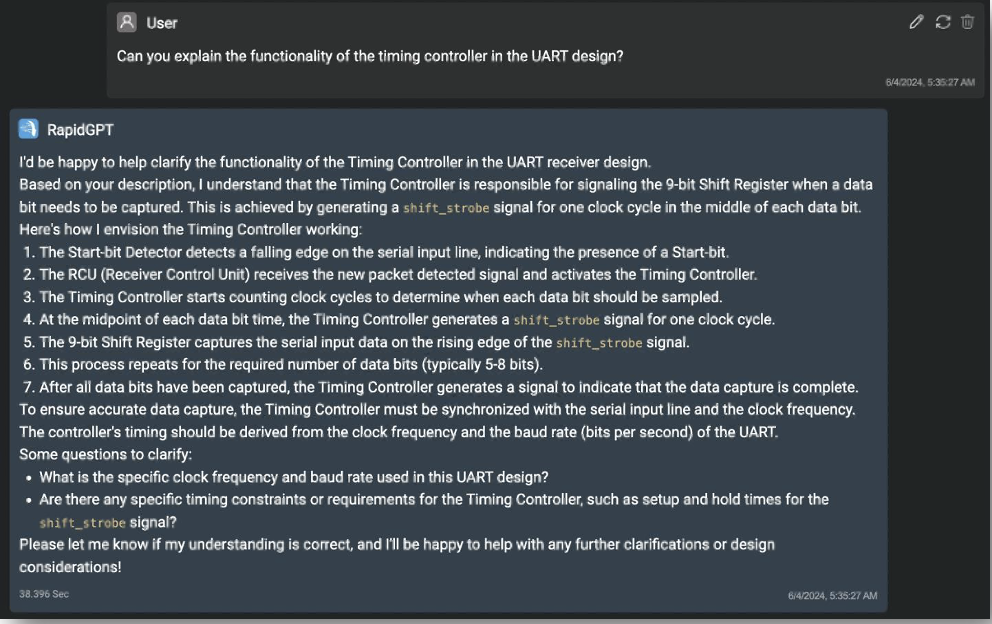

Providing context helps RapidGPT fully understand the underlying goal and address it effectively.
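One way to make context a habit is to put it in a consistent, labeled block ahead of the actual request. The sketch below is a hypothetical helper that does this; the function name, the "Context:"/"Task:" layout, and the sample project details (including the `uart_rx` module) are illustrative assumptions rather than a format RapidGPT requires.

```python
def build_prompt(context: dict[str, str], task: str) -> str:
    """Prepend labeled project context to a task so the goal's constraints come first."""
    lines = ["Context:"]
    # One bullet per context item, in the order the items were given.
    for key, value in context.items():
        lines.append(f"- {key}: {value}")
    lines.append("")
    lines.append("Task:")
    lines.append(task.strip())
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_prompt(
        {
            "Project": "UART receiver for a low-power sensor board",
            "Target": "FPGA, single 50 MHz clock domain",
            "Conventions": "Verilog-2001, active-low synchronous reset rst_n",
        },
        "Add a parity-error output to the existing uart_rx module.",
    )
    print(prompt)
```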

Prompt Engineering Tips: Be Specific

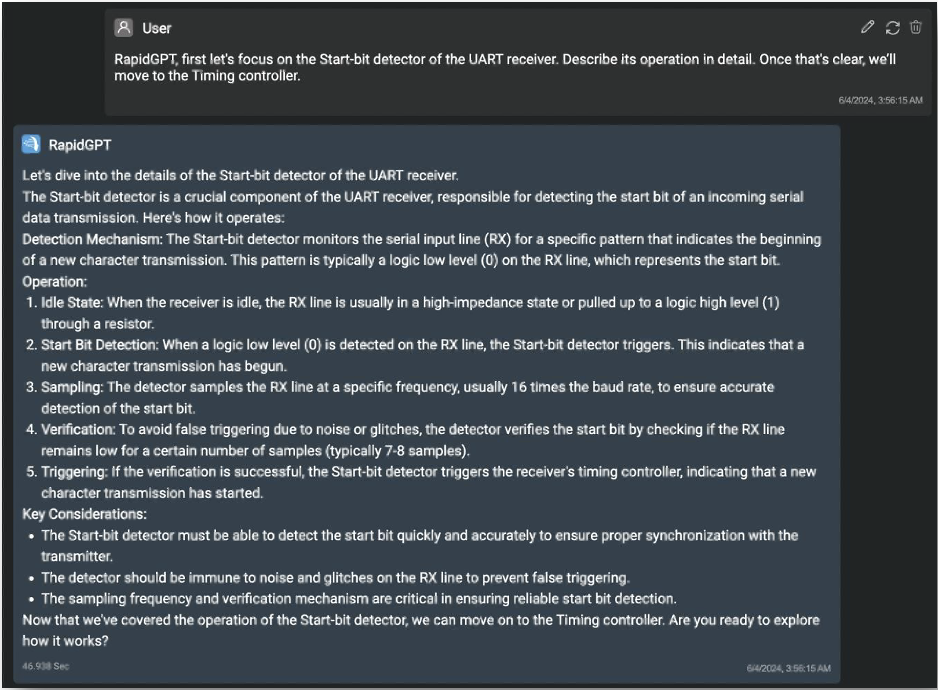

Whether you are providing detailed specifications for a code-generation prompt or requesting a small modification to existing code, stating specifics removes ambiguity and typically yields clearer, more direct responses from RapidGPT.
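For code generation, "specific" usually means spelling out names, port directions, bit widths, clocking, reset behavior, and functional requirements instead of leaving them to be guessed. The sketch below renders a small, hypothetical spec object into an explicit request; the `Port` and `ModuleSpec` classes, the choice of Verilog, and the `counter8` example are assumptions for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class Port:
    name: str
    direction: str  # "input" or "output"
    width: int = 1


@dataclass
class ModuleSpec:
    name: str
    ports: list[Port]
    clock: str
    reset: str
    behavior: list[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        """Render the spec as an explicit RTL request with no unstated details."""
        lines = [f"Write a synthesizable Verilog module named {self.name}."]
        lines.append("Ports:")
        for p in self.ports:
            # State every width, even 1-bit signals, so bus sizes are never guessed.
            lines.append(f"- {p.name}: {p.direction}, {p.width}-bit")
        lines.append(f"Clock: {self.clock}")
        lines.append(f"Reset: {self.reset}")
        if self.behavior:
            lines.append("Behavior:")
            lines.extend(f"{i}. {b}" for i, b in enumerate(self.behavior, start=1))
        return "\n".join(lines)


if __name__ == "__main__":
    spec = ModuleSpec(
        name="counter8",
        ports=[Port("clk", "input"), Port("rst_n", "input"),
               Port("en", "input"), Port("count", "output", 8)],
        clock="clk, rising edge",
        reset="rst_n, active-low, synchronous; clears count to 0",
        behavior=["When en is high, increment count by 1 each clock cycle.",
                  "count wraps from 255 to 0."],
    )
    print(spec.to_prompt())
```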

Prompt Engineering Tips: Request Re-Iteration

After you describe a hardware design, asking RapidGPT to explain its understanding of it is an easy way to have it reinforce the concepts by articulating them. It also lets you catch misunderstandings before any code is generated.

Prompt Engineering Tips: Operate Progressively

Working through a task, or revealing information, progressively helps manage complexity and guides RapidGPT through step-by-step reasoning.
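A progressive session can be planned ahead as a short sequence of prompts, each sent only after you have reviewed the previous answer. The sketch below is one hypothetical sequence: it introduces the interface first, asks RapidGPT to restate its understanding (the Request Re-Iteration tip), adds the behavior, and only then asks for RTL. The function name, the step wording, and the `fifo16` example are assumptions; no RapidGPT API is called.

```python
def progressive_steps(module: str, interface: str, behavior: str) -> list[str]:
    """Return prompts to send one at a time, each building on the previous answer."""
    return [
        # Step 1: interface only, explicitly deferring code generation.
        f"We are designing a module called {module}. Its interface is: {interface}. "
        "Do not write code yet.",
        # Step 2: have the model restate what it has understood so far.
        f"In your own words, summarize what {module} must do based on the interface so far.",
        # Step 3: add the behavior and invite questions before any RTL is written.
        f"Here is the required behavior: {behavior}. List any ambiguities before continuing.",
        # Step 4: request the RTL once the earlier steps look correct.
        f"Now write the RTL for {module}, following the interface and behavior above.",
    ]


if __name__ == "__main__":
    steps = progressive_steps(
        "fifo16",
        "clk, rst_n, wr_en, rd_en, din[7:0], dout[7:0], full, empty",
        "a 16-entry first-in, first-out buffer with registered outputs",
    )
    for n, step in enumerate(steps, start=1):
        print(f"Step {n}: {step}")
```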

Prompt Engineering Tips: Clarify As Needed

Often, RapidGPT will not behave as expected, and clarification from the user will be needed to get the conversation back on track.

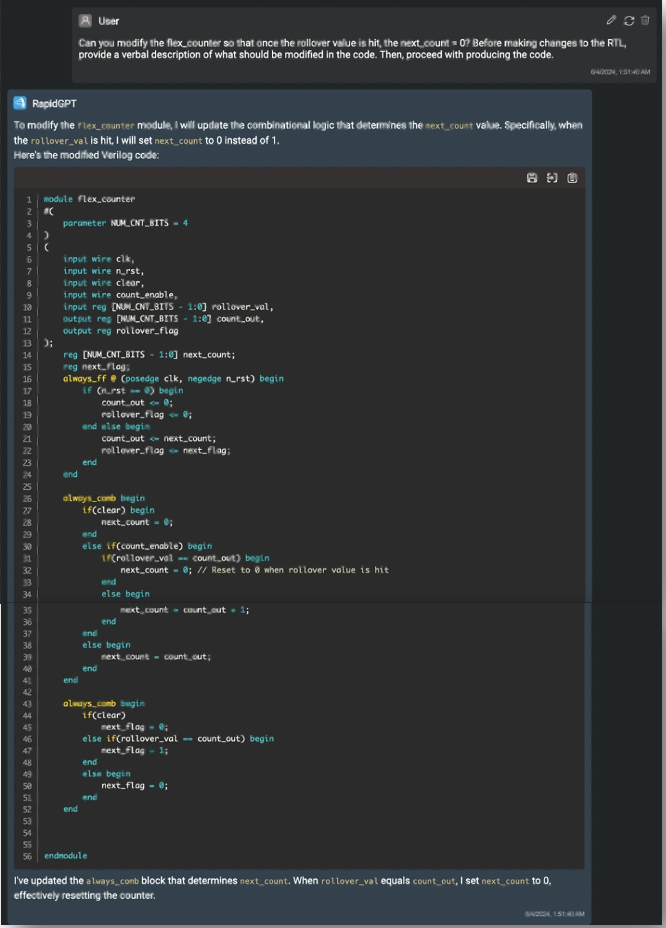

Prompt Engineering Tips: Enforce "System 2" Thinking

System 1 thinking is a near-instantaneous process: it happens automatically, intuitively, and with little effort, and it is driven by instinct and experience. System 2 thinking, by contrast, is slower and requires more effort; it is more deliberate and logical. We can push RapidGPT toward System 2 thinking with prompts that ask it to reason before it acts. The example below demonstrates RapidGPT “thinking” through how it should make RTL changes before making them. We’ve found this to be a powerful technique for higher-quality results.
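A reusable way to apply this is to wrap every change request in instructions that require an explicit plan before any code is written. The sketch below is a hypothetical wrapper; the template wording, the four planning steps, and the function name are assumptions for illustration, not a RapidGPT feature. Note that `str.format` substitutes the RTL as a plain value, so Verilog braces such as `{a, b}` in the code pass through unchanged.

```python
PLAN_FIRST_TEMPLATE = """Before changing any code, think through the change step by step:
1. Restate the requested change in one sentence.
2. List every signal, register, and always block that the change affects.
3. Describe how each one must change and why, including reset and timing effects.
4. Point out risks such as inferred latches, width mismatches, or broken existing behavior.
Only after completing steps 1-4, output the complete updated RTL.

Requested change: {request}

Current RTL:
{rtl}
"""


def plan_first_prompt(request: str, rtl: str) -> str:
    """Wrap an RTL change request so the model must reason about it before editing."""
    return PLAN_FIRST_TEMPLATE.format(request=request.strip(), rtl=rtl.strip())


if __name__ == "__main__":
    current_rtl = """always @(posedge clk) begin
    if (!rst_n) q <= 1'b0;
    else q <= d;
end"""
    print(plan_first_prompt("Add a synchronous enable input en that holds q when low.", current_rtl))
```

Reviewing the plan in steps 1 to 4 before accepting the RTL gives you a natural checkpoint to clarify or correct the approach.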